1. World Modeling for Control and Perception

Cognitive and developmental robotics is a research field that aims to build robots that develop through interactions with their environments, as human children do. This is one of the dreams of robotics. An autonomous cognitive system should be able to learn and adapt to its environment through interactions. Notably, the experience that underlies a robot's adaptation and learning should come from its own sensorimotor system. Creating cognitive dynamics that allow a robot to develop and learn from its own action-perception cycles is a critical challenge in cognitive and developmental robotics. The autonomous learning process that occurs throughout development is also referred to as lifelong learning, and it is thought to be the foundation for developing the social capabilities that adaptive collaborative robots need.

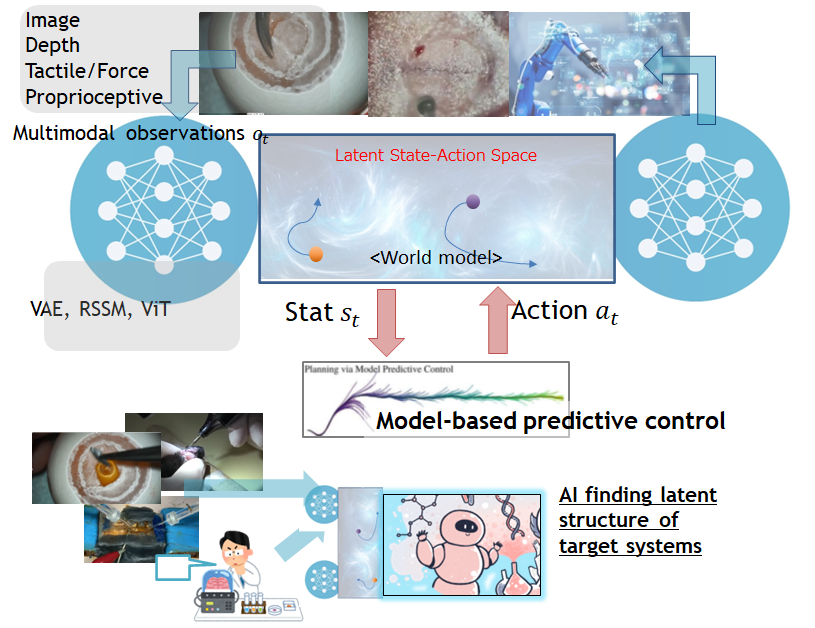

Following the outstanding success of deep learning and probabilistic generative models in the 2010s, world models have been attracting attention in artificial intelligence. A cognitive system (e.g., an agent) that learns a world model that includes the agent itself can predict its future sensory observations and optimize its controller (i.e., behavior) based on the predicted sensory consequences of its actions. The idea is closely related to predictive coding, which has been studied in neurorobotics to develop neuro-dynamical systems that enable robots to acquire adaptive behaviors and social perception. Predictive coding and world models share a fundamental idea with the free-energy principle, which has become an influential theory in neuroscience in recent years.
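To make the idea of "optimizing behavior from predicted sensory consequences" concrete, here is a minimal sketch under strong simplifying assumptions: a hypothetical one-dimensional linear environment, a world model fitted by least squares from random interaction, and a random-shooting model predictive controller that re-plans at every step. The environment, the names `env_step`, `predict`, and `plan`, and all parameter values are illustrative inventions; this is not the method of any publication in this section.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical 1-D environment, unknown to the agent: x' = 0.9 x + 0.5 u + noise
def env_step(x, u):
    return 0.9 * x + 0.5 * u + 0.01 * rng.standard_normal()

# 1) Learn a world model x' ~ a*x + b*u from random interaction (least squares)
xs, us, next_xs = [], [], []
x = 0.0
for _ in range(200):
    u = rng.uniform(-1.0, 1.0)
    x_next = env_step(x, u)
    xs.append(x); us.append(u); next_xs.append(x_next)
    x = x_next
features = np.column_stack([xs, us])
(a_hat, b_hat), *_ = np.linalg.lstsq(features, np.array(next_xs), rcond=None)

def predict(x0, actions):
    """Roll the learned model forward for a batch of action sequences (n, horizon)."""
    preds = np.empty_like(actions)
    x_t = np.full(actions.shape[0], x0, dtype=float)
    for t in range(actions.shape[1]):
        x_t = a_hat * x_t + b_hat * actions[:, t]
        preds[:, t] = x_t
    return preds

# 2) Random-shooting planner: score sampled action sequences by predicted outcomes
def plan(x0, goal, horizon=10, n_samples=500):
    candidates = rng.uniform(-1.0, 1.0, size=(n_samples, horizon))
    costs = np.sum((predict(x0, candidates) - goal) ** 2, axis=1)
    return candidates[np.argmin(costs), 0]  # MPC: execute only the first action

# 3) Closed loop: re-plan from the newly observed state at every step
x, goal = 0.0, 2.0
for _ in range(30):
    x = env_step(x, plan(x, goal))
print(f"learned a={a_hat:.2f}, b={b_hat:.2f}, final state={x:.2f}")
```

The agent never queries the true dynamics while planning; it only imagines outcomes with its learned model and acts on the best imagined sequence. Real systems replace the linear model with deep latent-variable models and the random search with more sample-efficient optimizers.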

The world model-based approach is promising. However, many applications and studies of world models tend to be limited to simulation studies. The challenges of developing autonomous cognitive-developmental robots based on world models, predictive coding, and the free-energy principle have not yet been fully tackled with real robots. These approaches are based on a generative view of cognition, and many computational cognitive models based on probabilistic generative models have been developed in studies of cognitive development and symbol emergence in robotics.

This research project focuses on theoretical and practical studies that enable us to create real-world embodied cognitive systems based on a world model-based approach.

Publication

- Karl Friston, Rosalyn J. Moran, Yukie Nagai, Tadahiro Taniguchi, Hiroaki Gomi, Josh Tenenbaum, World model learning and inference, Neural Networks, 144(-), 573-590, 2021. https://doi.org/10.1016/j.neunet.2021.09.011

- Masashi Okada, Tadahiro Taniguchi, Variational Inference MPC for Bayesian Model-based Reinforcement Learning, Conference on Robot Learning (CoRL), 2019.

- Masashi Okada, Norio Kosaka, Tadahiro Taniguchi, PlaNet of the Bayesians: Reconsidering and Improving Deep Planning Network by Incorporating Bayesian Inference, IEEE International Conference on Intelligent Robots and Systems (IROS), 2020.

- Masashi Okada, Tadahiro Taniguchi, Dreaming: Model-based Reinforcement Learning by Latent Imagination without Reconstruction, IEEE International Conference on Robotics and Automation (ICRA), 2021.

2. Whole-Brain Probabilistic Generative Models for Developing Cognitive Architecture and Artificial General Intelligence

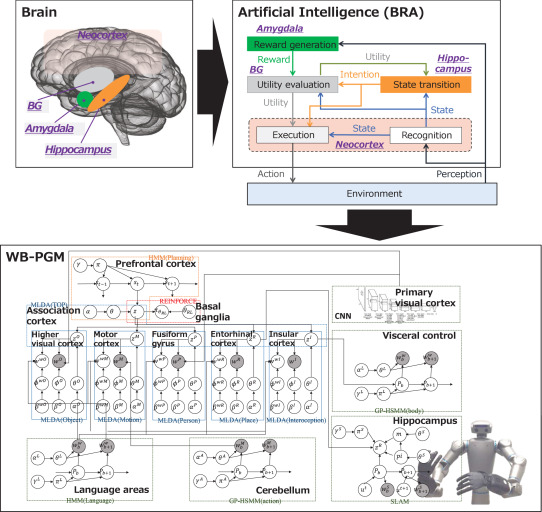

Building a human-like integrative artificial cognitive system, that is, an artificial general intelligence (AGI), is the holy grail of the artificial intelligence (AI) field. Furthermore, a computational model that enables an artificial system to achieve cognitive development would be an excellent reference for brain and cognitive science. Taniguchi et al. [1] describe an approach to developing a cognitive architecture by integrating elemental cognitive modules so that the cognitive architecture can be trained as a whole. This approach is based on two ideas: (1) brain-inspired AI, in which researchers learn from the architecture of the human brain to build human-level intelligence, and (2) a probabilistic generative model (PGM)-based cognitive architecture, which develops a cognitive system for developmental robots by integrating PGMs. The proposed development framework is called a whole-brain PGM (WB-PGM). It differs fundamentally from existing cognitive architectures in that it can learn continuously from sensorimotor information.
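As a toy illustration of why integrating PGM modules through shared variables lets the whole system be treated as one model, the sketch below assumes two hypothetical Gaussian modules ("vision" and "touch") that observe the same latent variable through different noise levels. Each module summarizes its evidence as a Gaussian message, and inference over the shared latent multiplies the prior with all messages. The class `GaussianModule`, the function `combine`, and the numbers are illustrative assumptions; this is a conjugate special case, not the SERKET or WB-PGM implementation.

```python
import numpy as np

class GaussianModule:
    """Hypothetical sensory module: observes latent z via x_i ~ N(z, noise_var)."""

    def __init__(self, noise_var):
        self.noise_var = noise_var

    def message(self, observations):
        # The likelihood of n i.i.d. observations, viewed as a function of z,
        # is proportional to N(z; mean(x), noise_var / n).
        obs = np.asarray(observations, dtype=float)
        return obs.mean(), self.noise_var / obs.size

def combine(prior, messages):
    """Posterior over the shared latent z: product of the prior and all messages."""
    precision = 1.0 / prior[1]
    weighted_sum = prior[0] / prior[1]
    for mean, var in messages:
        precision += 1.0 / var
        weighted_sum += mean / var
    return weighted_sum / precision, 1.0 / precision

vision = GaussianModule(noise_var=1.0)
touch = GaussianModule(noise_var=0.25)
post_mean, post_var = combine(
    prior=(0.0, 10.0),
    messages=[vision.message([1.0, 1.2, 0.8]), touch.message([1.4, 1.6])],
)
print(f"posterior mean={post_mean:.3f}, variance={post_var:.3f}")
```

The posterior variance is smaller than what either module achieves alone, and the more reliable module pulls the estimate harder. Larger architectures connect far richer modules, where such messages generally have to be approximated rather than computed in closed form.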

Because PGMs explicitly describe the informational relationships between variables, WB-PGM provides interpretable guidance from the computational sciences to neuroscience. Given such information, researchers in neuroscience can give feedback to researchers in AI and robotics on what current models lack relative to the brain. Furthermore, WB-PGM can facilitate collaboration between researchers in the neuro-cognitive sciences and those in AI and robotics.

Publication

- Tadahiro Taniguchi, Hiroshi Yamakawa, Takayuki Nagai, Kenji Doya, Masamichi Sakagami, Masahiro Suzuki, Tomoaki Nakamura, Akira Taniguchi, A whole brain probabilistic generative model: Toward realizing cognitive architectures for developmental robots, Neural Networks, 150(-), 293-312, 2022. https://doi.org/10.1016/j.neunet.2022.02.026

- Tadahiro Taniguchi, Tomoaki Nakamura, Masahiro Suzuki, Ryo Kuniyasu, Kaede Hayashi, Akira Taniguchi, Takato Horii, Takayuki Nagai, Neuro-SERKET: Development of Integrative Cognitive System through the Composition of Deep Probabilistic Generative Models, New Generation Computing, 38(-), 23-48, 2020. https://doi.org/10.1007/s00354-019-00084-w

- Tomoaki Nakamura, Takayuki Nagai, Tadahiro Taniguchi, SERKET: An Architecture For Connecting Stochastic Models to Realize a Large-Scale Cognitive Model, Frontiers in Neurorobotics, 12(-), 25, 2018. https://doi.org/10.3389/fnbot.2018.00025